#Autonomous Systems #Rover #Robotics #ICRA

Navigating the Wild: Remote Robot Control in the EarthRover Challenge

While robotics labs are full of polished systems that rely on clean data and controlled environments, real-world “in the wild” navigation is messier: sensor data is noisy, delayed, and inconsistent, and environments are unpredictable. The EarthRover Challenge tests exactly this kind of problem, and our team from Tampere University took 2nd place by building a system that can handle it.

What Was the Challenge?

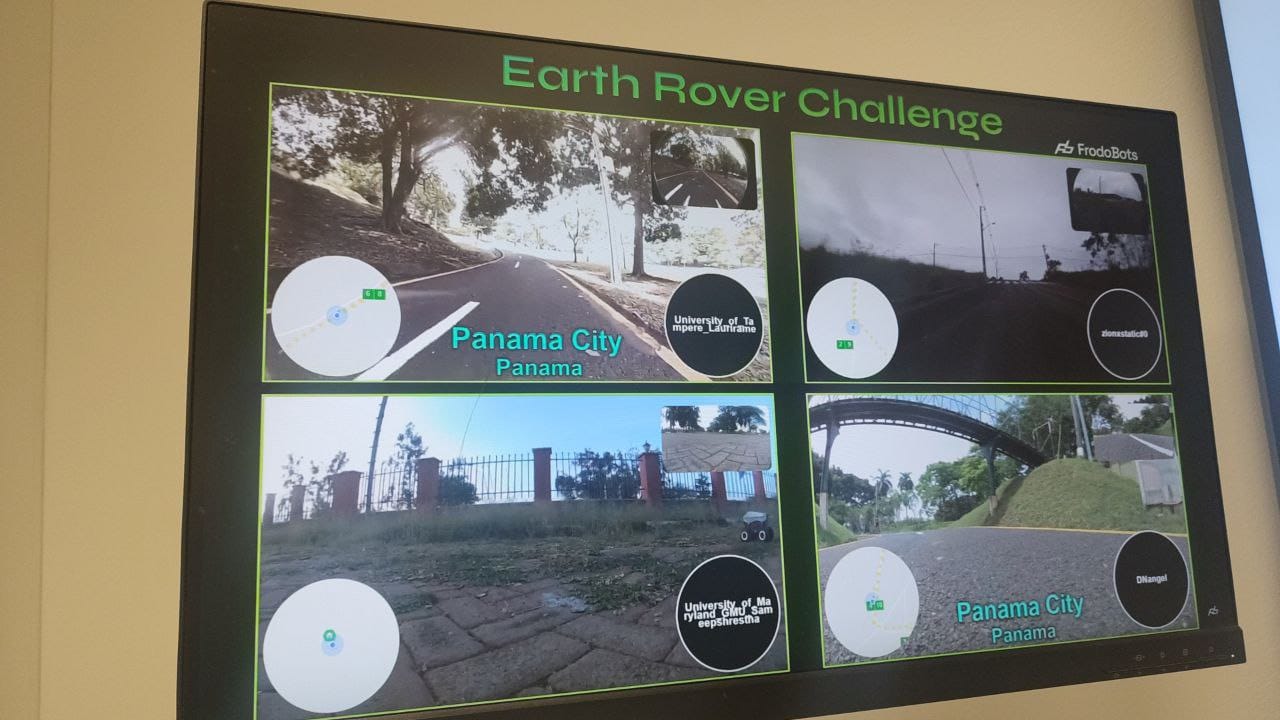

EarthRover is a remote urban navigation competition: robots located in cities around the world are given GPS checkpoints to reach. Teams must control them remotely, often through delayed and unreliable streams of camera, IMU, GPS, and other sensor data. Both environments and mission difficulty vary (crowds, roads, sidewalks, obstacles). The goal is a system that is robust and safe and that generalizes across both seen and unseen conditions. [earth-rover-challenge.github.io](https://earth-rover-challenge.github.io)
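Because each mission is defined by GPS checkpoints, the most basic quantities a controller needs are the distance and bearing from the robot's current GPS fix to the next checkpoint. The sketch below computes both with the standard haversine and initial-bearing formulas on a spherical Earth. The function name and the 6,371 km mean radius are our illustrative choices, not part of any challenge API.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres (spherical-Earth assumption)


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Return (great-circle distance in metres, initial bearing in degrees
    clockwise from north) from point 1 (robot) to point 2 (checkpoint).
    Inputs are in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)

    # Haversine formula for the great-circle distance.
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    dist = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    # Initial (forward) bearing, normalised to [0, 360).
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return dist, bearing
```

Subtracting the robot's current heading from `bearing`, wrapped to the range ±180°, gives the heading error a steering controller can act on.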

Our Approach

We adopted a two-pronged strategy:

Heading Reference System (Filter-based)

To cope with inconsistent orientation and heading data, we built a filter (for example, fusing IMU readings with magnetometer or GPS data when available) that produces a more stable and reliable heading reference. It acts as our “compass,” telling the system which way it is facing and how it is turning.
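The filter's exact design is not described here, so as an illustration only, here is a minimal complementary filter, one common way to fuse a gyro with an absolute heading source. It assumes headings are compass degrees (clockwise from north), that the gyro yaw rate has already been converted to the same clockwise-positive convention in degrees per second, and that `alpha = 0.98` is an arbitrary placeholder. A Kalman filter would be a natural alternative.

```python
def wrap_deg(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


class ComplementaryHeadingFilter:
    """Hypothetical complementary filter: integrates gyro yaw rate for smooth
    short-term heading and nudges the estimate toward an absolute heading
    (magnetometer or GPS course) whenever one is available."""

    def __init__(self, initial_heading_deg=0.0, alpha=0.98):
        self.heading = wrap_deg(initial_heading_deg)
        self.alpha = alpha  # weight on the gyro prediction (assumed value)

    def update(self, yaw_rate_dps, dt, absolute_heading_deg=None):
        # Predict: integrate the yaw rate (degrees per second) over dt seconds.
        predicted = self.heading + yaw_rate_dps * dt
        if absolute_heading_deg is None:
            # No absolute reference this step: dead-reckon on the gyro alone.
            self.heading = wrap_deg(predicted)
        else:
            # Correct: move a fraction (1 - alpha) of the *wrapped* error
            # toward the reference, so 170 deg vs -170 deg is a 20 deg error.
            error = wrap_deg(absolute_heading_deg - predicted)
            self.heading = wrap_deg(predicted + (1.0 - self.alpha) * error)
        return self.heading
```

A higher `alpha` smooths out magnetometer and GPS noise but corrects gyro drift more slowly. Wrapping the error matters: without it, correcting 170° toward −170° would swing the estimate the long way round through 0° instead of across ±180°.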

Data-Driven Steering Model

Relying only on the heading reference isn’t enough in complex scenes or when visual data arrives late. We trained a steering policy (a neural network or similar learned regressor) that takes the available sensor streams (e.g., camera, delayed GPS, IMU) as input and outputs control commands such as turn left/right and go forward/back. The model is robust to noise, delays, and inconsistent data.
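The model's internals are not described here, but one common way to help a learned policy tolerate delayed or missing inputs is to pair each input with an explicit validity flag, so that a stale reading is marked as unavailable instead of silently reused. The sketch below builds such a feature vector. The stream names, the `Sample` type, and the 0.5 s staleness threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Sample:
    value: float  # latest reading from one sensor stream
    stamp: float  # time the reading was taken, in seconds


def build_features(now: float,
                   latest: Dict[str, Optional[Sample]],
                   order: List[str],
                   max_age_s: float = 0.5) -> List[float]:
    """Return a flat [value, valid, value, valid, ...] list, one pair per
    stream in `order`. Missing readings, or readings older than max_age_s,
    become (0.0, 0.0) so the model sees an explicit 'unavailable' flag."""
    features: List[float] = []
    for name in order:
        s = latest.get(name)
        fresh = s is not None and (now - s.stamp) <= max_age_s
        features.append(s.value if fresh else 0.0)
        features.append(1.0 if fresh else 0.0)
    return features
```

For example, with `now=10.0`, a heading-error sample stamped at 9.9 s is kept, while a GPS sample stamped at 8.0 s and an absent IMU stream are both zeroed and flagged invalid. Feeding the sample age itself as an extra feature is a common variation.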

By combining the filter-based heading with the learned steering, the system adapts: when sensor data is poor, it relies more on the heading reference; when visual cues and other data are good, the steering model takes more control.
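How control is actually shared between the two components is not specified. One simple way to realize the behavior described above is a confidence-weighted blend of the two turn commands, sketched below. Everything here is an assumption for illustration: the proportional gain `kp`, the normalized turn-rate range of ±1 (positive means turn right, clockwise), and the `confidence` score itself, which in practice might be derived from sensor freshness or image quality.

```python
def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def blended_turn_rate(heading_deg: float,
                      target_bearing_deg: float,
                      learned_turn_rate: float,
                      confidence: float,
                      kp: float = 0.02,
                      max_rate: float = 1.0) -> float:
    """Blend a proportional heading controller with a learned steering output.
    confidence in [0, 1]: 0 = trust only the heading reference,
    1 = trust only the learned model. All gains are hypothetical."""
    c = min(max(confidence, 0.0), 1.0)
    # Classical fallback: steer proportionally to the wrapped heading error.
    heading_cmd = kp * wrap_deg(target_bearing_deg - heading_deg)
    cmd = c * learned_turn_rate + (1.0 - c) * heading_cmd
    # Saturate to the assumed actuator range.
    return max(-max_rate, min(max_rate, cmd))
```

A linear blend keeps the command continuous as confidence changes, avoiding the abrupt jumps that a hard switch between controllers would cause.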

Results & What We Learned

Our system successfully navigated several “in-the-wild” urban locations under varying lighting, traffic, pedestrian density, weather, and sensor conditions, and we placed 2nd overall among the competing AI teams.

Key lessons

- Robustness to sensor noise and delay is non-negotiable in real deployments.

- In our experience, hybrid systems (filter plus learned model) outperform purely learned or purely classical methods when conditions degrade.

- Generalization to unseen environments is harder than it looks; overfitting to “nice” scenes hurts performance in the wild.

Why It Matters

Robot navigation with messy, delayed, and partial data is much closer to how systems deployed in cities, disaster zones, or remote sites must operate. Our 2nd-place result suggests that such systems can be built to generalize across “unseen” environments, not just lab settings. For applications like delivery robots, autonomous inspection, and remote monitoring, this kind of robustness is essential.